Melbourne, as the most Nathan Barley of Australian cities, so easily lampooned for its population of bushranger bearded baristas with half-baked app ideas, makes a strong argument for being Australia’s Portland. Perfectly placed then, for reviewing Lumen – new real-time visual software coded by Jason Grlicky in downtown Portland, which tries to add some contemporary twists to the quirky history of video synthesis.

What is Lumen?

A Mac-based app (needing OS X 10.8 or later) for ‘creating engaging visuals in real-time’… with a ‘semi-modular design that is both playable and deep… the perfect way to get into video synthesis.’ In other words – it’s a software-based video synthesiser, with all the noodling, head-scratching experiments and moments of delightful serendipity this implies. A visual synthesiser that can build up images from scratch, then rhythmically modify and refine them over time. It has been thoughtfully put together though, so despite the range of possibilities it’s also very quickly ‘playable’, while always suggesting there’s plennnnttttyyyy of room to explore.

The Lumen Interface

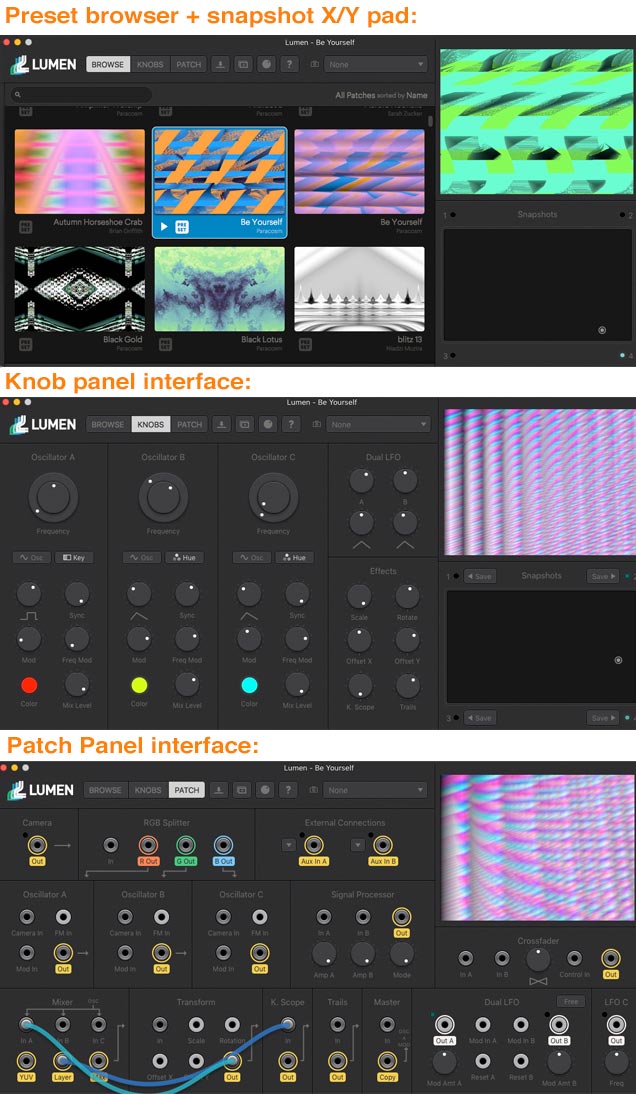

While the underlying principles of hardware based video synthesisers are being milked here to good effect – a lot of the merits of Lumen are in the ways they’ve managed to make these principles easily accessible with well considered interface design. It has been divided into 3 sections – a preset browser (which also features a lovely X/Y pad for interpolating between various presets), a knob panel interface, and a patch panel interface. It’s a very skeuomorphic design, but it also cleverly takes the software to places where hardware couldn’t go (more on that later).
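For the curious, here is one way an X/Y pad like that could blend presets. This is only a sketch under assumptions: Lumen's actual interpolation method isn't documented here, so the code uses plain bilinear interpolation between four corner presets, and the parameter names (`osc_freq`, `feedback`) and the `blend_presets` helper are made up for illustration.

```python
from typing import Dict

# A preset is modelled as a flat mapping of knob name -> value (hypothetical).
Preset = Dict[str, float]


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation from a (t=0) to b (t=1)."""
    return a + (b - a) * t


def blend_presets(corners: Dict[str, Preset], x: float, y: float) -> Preset:
    """Bilinearly blend four presets placed at the pad's corners.

    corners uses the keys 'bl', 'br', 'tl', 'tr' (bottom-left, etc.).
    x and y are pad coordinates, clamped to the range 0..1.
    """
    x = min(max(x, 0.0), 1.0)
    y = min(max(y, 0.0), 1.0)
    blended: Preset = {}
    for name in corners["bl"]:
        bottom = lerp(corners["bl"][name], corners["br"][name], x)
        top = lerp(corners["tl"][name], corners["tr"][name], x)
        blended[name] = lerp(bottom, top, y)
    return blended


pad = {
    "bl": {"osc_freq": 0.0, "feedback": 0.0},
    "br": {"osc_freq": 1.0, "feedback": 0.0},
    "tl": {"osc_freq": 0.0, "feedback": 1.0},
    "tr": {"osc_freq": 1.0, "feedback": 1.0},
}
print(blend_presets(pad, 0.25, 0.75))
```

Dragging a finger across the pad then just means calling `blend_presets` with new coordinates on every frame, which is why the transitions between snapshots feel continuous rather than switched.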

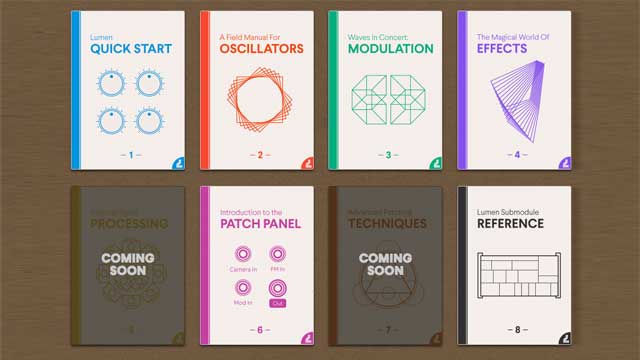

What should be evident in those screengrabs is that experimentation is easy – and there’s a lot of depth to explore. The extensive reference material helps a lot with the latter. And as you can see, they can’t help but organise that beautifully on their site:

Lumen Presets

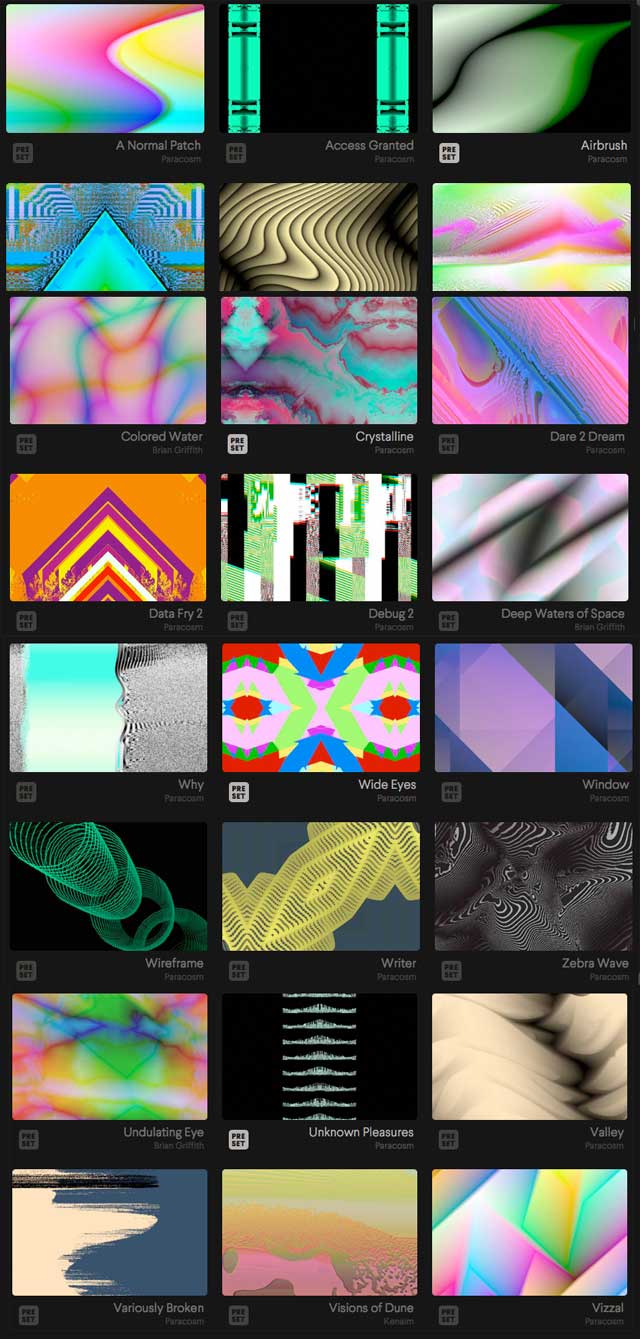

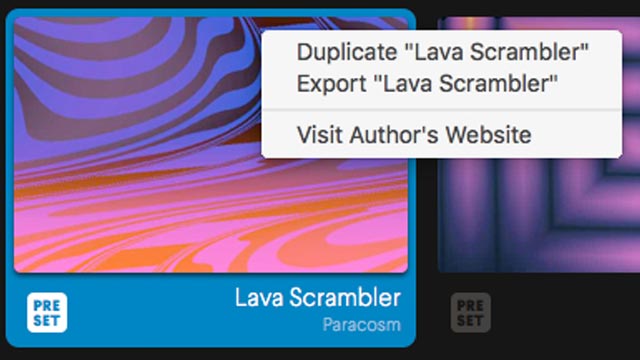

Lumen comes pre-loaded with 150+ presets, so it’s immediately satisfying upon launch, to be able to jump between patches and see what kind of scope and visual flavours are possible.

… and it’s easy to copy and remix presets, or export and swap them – eg on the Lumen slack channel.

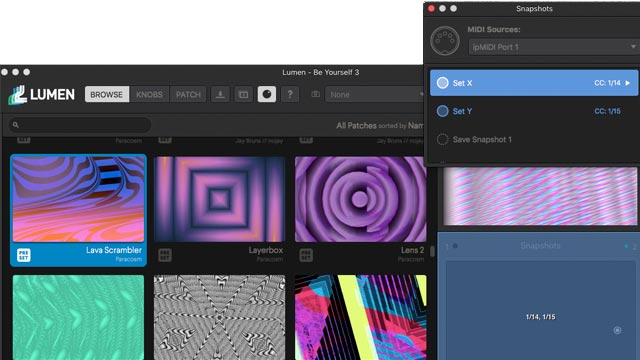

Midi, OSC + Audioreactivity

Although all are planned, only midi exists in Lumen so far, but it’s beautifully integrated. With a midi controller (or a phone/tablet app sending OSC to a midi translating app on your computer) – Lumen really comes into its own, and the real-time responsiveness can be admired. Once various parameters are connected via midi control, they can of course effectively be made audioreactive, by sending signals from audioreactively controlled parameters in other software. Native integration will be nice when it arrives though.
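To make the OSC-to-midi translation step less mysterious, here is a rough sketch of what such a translating app does at its core: take a normalised OSC float (0.0 to 1.0) and turn it into a 3-byte midi Control Change message. The function names are invented for this example, and the controller number is arbitrary; which CC drives which Lumen knob depends on how you map it yourself.

```python
def float_to_cc_value(value: float) -> int:
    """Scale a normalised OSC float (0.0..1.0) to a 7-bit midi value (0..127)."""
    value = min(max(value, 0.0), 1.0)
    return int(value * 127 + 0.5)  # round to nearest


def control_change(channel: int, controller: int, value: int) -> bytes:
    """Build a raw midi Control Change message.

    channel is 0..15; controller and value are 0..127.
    The status byte for Control Change is 0xB0 plus the channel number.
    """
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0..15")
    if not 0 <= controller <= 127 or not 0 <= value <= 127:
        raise ValueError("controller and value must be 0..127")
    return bytes([0xB0 | channel, controller, value])


# A fader on the phone sits halfway up; CC 20 is an arbitrary choice here.
message = control_change(0, 20, float_to_cc_value(0.5))
print(message.hex())
```

The same scaling works for an audio analysis value from other software, which is how the workaround for audioreactivity described above hangs together.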

Video Feedback, Software Style

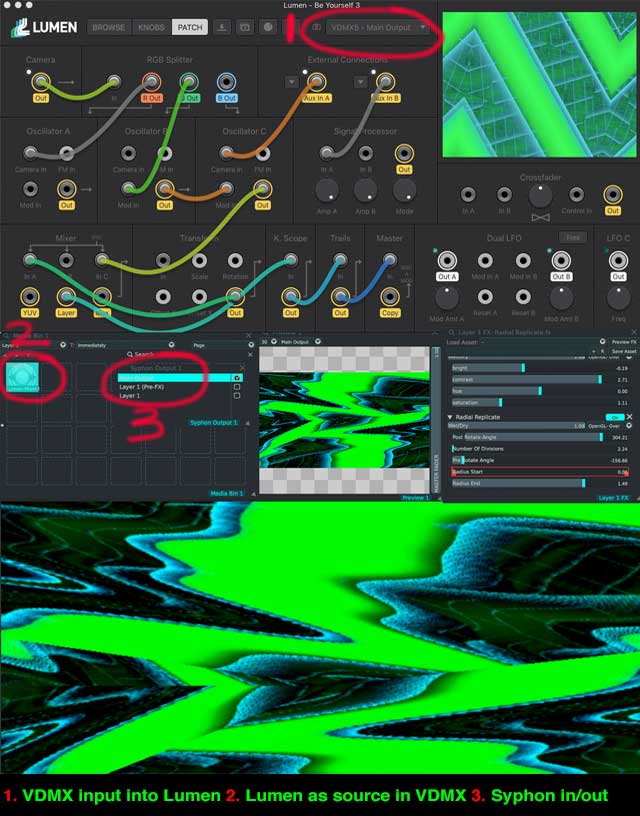

Decent Syphon integration of course opens a whole range of possibilities… Lumen’s output can be easily piped into software like VDMX or CoGe for use as a graphic source or texture, or mapping software like MadMapper. At the moment there are some limitations with aspect ratios and output sizes, but that’s apparently being resolved in a near-future update.

With the ability to import video via Syphon though, Lumen can reasonably be considered an external visual effects unit. Lumen can also take in camera feeds for processing, but it’s the ability to take in a custom video feed that makes it versatile – eg video clips created for certain visual ideas, or the output of a composition in a mapping program.

This screengrab below shows the signal going into Lumen from VDMX, and also out of Lumen back into VDMX. Obviously, at some point this inevitably means feedback, and all the associated fun/horror.
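If the idea of a feedback loop is new, a toy version helps. The sketch below is not how Lumen or VDMX process video; it just treats a 'frame' as a short list of brightness values and, on each pass, adds a shifted, dimmed copy of the previous output back onto the source. The `amount` and `shift` parameters are stand-ins for whatever effects sit in the loop.

```python
from typing import List


def feedback_step(source: List[float], previous: List[float],
                  amount: float, shift: int) -> List[float]:
    """Mix the source frame with a shifted, attenuated copy of the last output."""
    n = len(source)
    shifted = [previous[(i - shift) % n] for i in range(n)]
    # Clip at 1.0, the way an over-driven video signal tops out at white.
    return [min(1.0, s + amount * p) for s, p in zip(source, shifted)]


def run_feedback(source: List[float], frames: int,
                 amount: float, shift: int) -> List[float]:
    """Run the loop for a number of frames, starting from a black output."""
    output = [0.0] * len(source)
    for _ in range(frames):
        output = feedback_step(source, output, amount, shift)
    return output


# One bright pixel, fed back three times with a one-pixel shift.
print(run_feedback([1.0, 0.0, 0.0, 0.0], 3, 0.5, 1))
```

The single bright pixel grows a fading trail, one step per frame. Push `amount` towards 1.0 and the trail never dies away, which is roughly where the horror half of fun/horror kicks in.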

Also worth a look: Lumen integration with VDMX – post by artist Wiley Wiggins – and accompanying video tutorial (including a nice tip to use OSCulator as a means of enabling TouchOSC finger-gliding control over the square pad interpolation of Lumen snapshots).

Requirements:

- macOS 10.8 or newer (Each license activates two computers)

- $129US

VERDICT

There’s an army of lovers of abstracted visuals that are going to auto-love Lumen, but it has scope too for others looking for interesting ways to add visual textures, and play with real-time visual effects on video feeds. It could feasibly have an interesting place in a non-real-time video production pipeline too. Hopefully in a few years, we’ll be awash in a variety of real-time visual synthesis apps, but for now Lumen is a delightfully designed addition to the real-time video ecosystem.

Interview with Lumen creator, Jason Grlicky

– What inspired you to develop Lumen?

I’ve always loved synthesizers, but for most of my life that was limited to audio synths. As soon as I’d heard about video synthesis, I knew I had to try it for myself! The concept of performing with a true video instrument – one that encourages real-time improvisation and exploration – really appeals to me.

Unfortunately, video synths can be really expensive, so I couldn’t get my hands on one. Despite not being able to dive in (or probably because of it), my mind wouldn’t let it go. After a couple failed prototypes, one morning I woke up with a technical idea for how I could emulate the analog video synthesis process in software. At that point, I knew that my path was set…

– When replicating analogue processes within software – what have been some limitations / happy surprises?

There have been so many happy accidents along the way. Each week during Lumen’s development, I discovered new techniques that I didn’t think would be possible with the instrument. There are several presets that I included which involve a slit-scan effect that only works because of the specific way I implemented feedback, for instance! My jaw dropped when I accidentally stumbled on that. I can’t wait to see what people discover next.

My favorite part about the process is that the laws of physics are just suggestions. Software gives me the freedom to deviate from the hardware way of doing things in order to make it as easy as possible for users. The way that Lumen handles oscillator sync is a great example of this.

– Can you describe a bit more about that freedom to deviate from hardware – in how Lumen handles oscillator sync?

In a traditional video synth oscillator, you’ll see the option to sync either to the line rate or to the vertical refresh rate, which allows you to create vertical or horizontal non-moving lines. When making Lumen, I wanted to keep the feeling of control as smooth as possible, so I made oscillator sync a knob instead of a switch. As you turn it clockwise, the scrolling lines created by the oscillator slow down, then stop, then rotate to create static vertical lines. It’s a little thing, but ultimately allows for more versatile output and more seamless live performance than has ever been possible using hardware video synths.
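(A reviewer's aside, not Jason's code: the knob behaviour he describes can be imagined roughly as below. This is a guess at the idea only. The first half of the knob's travel is assumed to slow the scroll to a stop, the second half to rotate the static bars from horizontal to vertical. The function names, the 50/50 split and the `cycles` value are all assumptions made for the sketch.)

```python
import math
from typing import Tuple


def sync_knob(knob: float) -> Tuple[float, float]:
    """Map a knob position (0..1) to (scroll_speed, rotation_angle).

    First half of the travel: scroll speed falls from 1 to 0, no rotation.
    Second half: no scrolling, angle rises from 0 to 90 degrees (in radians).
    """
    knob = min(max(knob, 0.0), 1.0)
    if knob < 0.5:
        return 1.0 - knob / 0.5, 0.0
    return 0.0, (knob - 0.5) / 0.5 * (math.pi / 2)


def oscillator(x: float, y: float, t: float, knob: float,
               cycles: float = 8.0) -> float:
    """Brightness (0..1) of a sine oscillator at screen position (x, y), time t."""
    speed, angle = sync_knob(knob)
    # Angle 0 varies brightness down the screen (horizontal lines);
    # angle pi/2 varies it across the screen (vertical lines).
    position = y * math.cos(angle) + x * math.sin(angle)
    phase = 2 * math.pi * (cycles * position + speed * t)
    return 0.5 + 0.5 * math.sin(phase)


for knob in (0.0, 0.5, 1.0):
    print(knob, sync_knob(knob))
```

With the knob at 0.5 the output no longer depends on `t`, so the horizontal lines hold still; at 1.0 it depends only on `x`, giving static vertical lines. A hardware switch only gets you the two end states, not the travel in between.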

– Were there any other hardware limitations that you were eager to exploit the absence of within software?

At every turn I was looking for ways to push beyond what hardware allows without losing the spirit of the workflow. The built-in patch browser is probably the number-one example. Being able to instantly recall any synth settings allows you to experiment faster than with a hardware synth, and having a preset library makes it easier to use advanced patching techniques.

The Snapshots XY-Pad, Undo & Redo, and the Transform/K-Scope effects are all other examples of where we took Lumen beyond what hardware can do today. Honestly, I think we’re just scratching the surface with what a software video instrument can be.

– How has Syphon influenced software development for you?

I had an epiphany a couple years back where I took a much more holistic view of audio equipment. After using modular synths for long enough, I realized that on a certain level, the separation between individual pieces of studio equipment is totally artificial. Each different sound source, running through effects, processed in the mixer – all of that is just part of a larger system that works together to create a part of a song. This thinking led me to create my first app, Polymer, which is all about combining multiple synths in order to play them as a single instrument.

For me, Syphon and Spout represent the exact same modular philosophy – the freedom to blend the lines between individual video tools and to treat them as part of a larger system. Being able to tap into that larger system allowed me to create a really focused video instrument instead of having to make it do everything under the sun. Thanks to technologies like Syphon, the future of video tools is a very bright place!

– What are some fun Lumen + Syphon workflows you enjoy – or enjoy seeing users play with?

My favorite workflow involves setting up Syphon feedback loops. You just send Lumen’s output to another VJ app like CoGe or VDMX, put some effects on it, then use that app’s output as a camera input in Lumen. It makes for some really unpredictable and delightful results, and that’s just from the simplest possible feedback loop!

– What are some things you’re excited about on the Lumen roadmap ahead?

We have so many plans for things to add and refine. I’m particularly excited about improving the ways that Lumen connects with the outside world – be that via new video input types, control protocols, or interactions with other programs. We’re working on adding audio-reactivity right now, which is going to be really fun when it ships. Just based on what we’ve seen in development so far, I expect it to add a whole new dimension to Lumen while keeping the workflow intuitive. It’s a difficult balance to strike, but that’s our mission – never to lose sight of the immediacy of control while adding new features.