Software Adventure-Time! Newest* video kid on the block = Mitti, a “modern, feature-packed but easy-to-use pro video cue playback solution for events, theatre, audiovisual shows, performances and exhibitions,” coming from the same stable that brought us Vezér (the timeline-based MIDI/OSC/DMX sequencer) and CoGe (the versatile VJ software). (By *Newest, I mean it’s been around since late 2016, but since then, Mitti has enjoyed a steady rate of notable additions and updates.)

After all the work that can go into video material for an event, playback control can sometimes be left as an afterthought – it’s not unknown to see videos being played back from video editing software and ‘live-scrubbed’, or to watch users flipping between their desktop and PowerPoint / Keynote / QuickTime etc. VJ software of course brings flexibility and reliability to playback control – taking care of basics such as fading to black, looping when a clip finishes, cross-fading, or simply keeping the desktop from suddenly appearing on the main projected screen. The flexibility of most VJ software is also one of its limitations – the strength of real-time effects and mixing tends to make interfaces more obtuse than they need to be for users seeking simple playback. And when getting simple playback exactly right becomes important, for events, theatre, installations etc, this is where Mitti seems to be aiming: in the ballpark of apps like PlaybackPro, QLab or perhaps Millumin, where cues and critical timing are given more priority than visual effects.

So What’s Mitti Like?

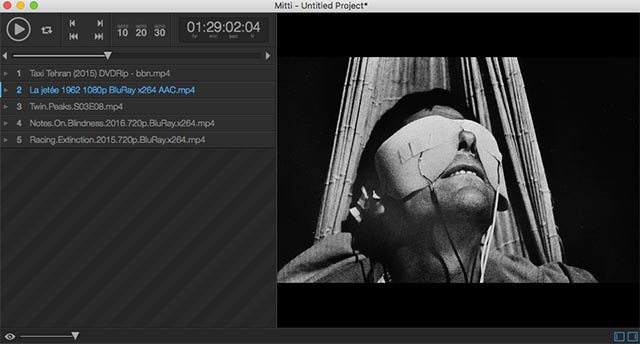

Running at its most minimal, with a playlist of clips, Mitti appears deceptively simple – but comes dense with custom controls at every level.

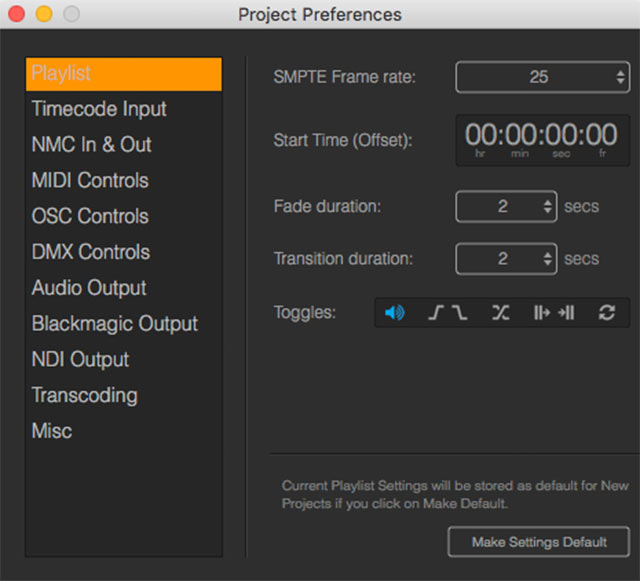

The Interface – a real strength is the effort spent on making Mitti’s interface clear and intuitive: it comes across as clean and easy to navigate, with extended options available where they might be expected. (And for further depth, go to the menu bar, choose Mitti, then Preferences, to see a very well organised array of options.)

Timing – Exact timing control can be critical, and Mitti boasts low latency, a GPU playback engine, and can run from an SMPTE-based internal clock, or slave to external hardware or software sources. If you know what these Mitti capacities mean, you’re possibly the intended audience: external MTC (MIDI Timecode), LTC (Linear Timecode), SMPTE offsetting, Jam-Sync.
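For readers who don't live in timecode, here is a minimal sketch of what "SMPTE offsetting" amounts to: incoming show timecode is converted to a frame count, and a fixed offset is subtracted so that, say, a show clock starting at 01:00:00:00 lines up with the start of a playlist. This is an illustration only, not Mitti's implementation; the function names are hypothetical, and it assumes non-drop-frame timecode at an integer frame rate (25 fps here).

```python
def timecode_to_frames(tc: str, fps: int = 25) -> int:
    """Convert non-drop-frame 'HH:MM:SS:FF' timecode to an absolute frame count."""
    hours, minutes, seconds, frames = (int(part) for part in tc.split(":"))
    if not (0 <= minutes < 60 and 0 <= seconds < 60 and 0 <= frames < fps):
        raise ValueError(f"invalid timecode for {fps} fps: {tc}")
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


def frames_to_timecode(total: int, fps: int = 25) -> str:
    """Convert an absolute frame count back to 'HH:MM:SS:FF'."""
    frames = total % fps
    secs = total // fps
    return f"{secs // 3600:02d}:{secs // 60 % 60:02d}:{secs % 60:02d}:{frames:02d}"


def apply_offset(incoming: str, offset: str, fps: int = 25) -> str:
    """Map incoming show timecode to a local playback position.

    The offset is the show timecode at which local playback should be at zero.
    Positions before the offset are clamped to the start.
    """
    local = timecode_to_frames(incoming, fps) - timecode_to_frames(offset, fps)
    return frames_to_timecode(max(local, 0), fps)


if __name__ == "__main__":
    # Show clock reads 01:00:10:12; playback is offset to start at 01:00:00:00.
    print(apply_offset("01:00:10:12", "01:00:00:00"))
```

Drop-frame rates such as 29.97 fps need extra frame-skipping logic that this sketch deliberately leaves out.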

Cues – You can create and trigger cues for videos, images, cameras (with native Blackmagic support), Syphon and NDI sources – each with nuanced options – and easily add or adjust in/out points per clip. Cues can be set to loop, and the playlist can be paused between cues until you are ready to start the next one.

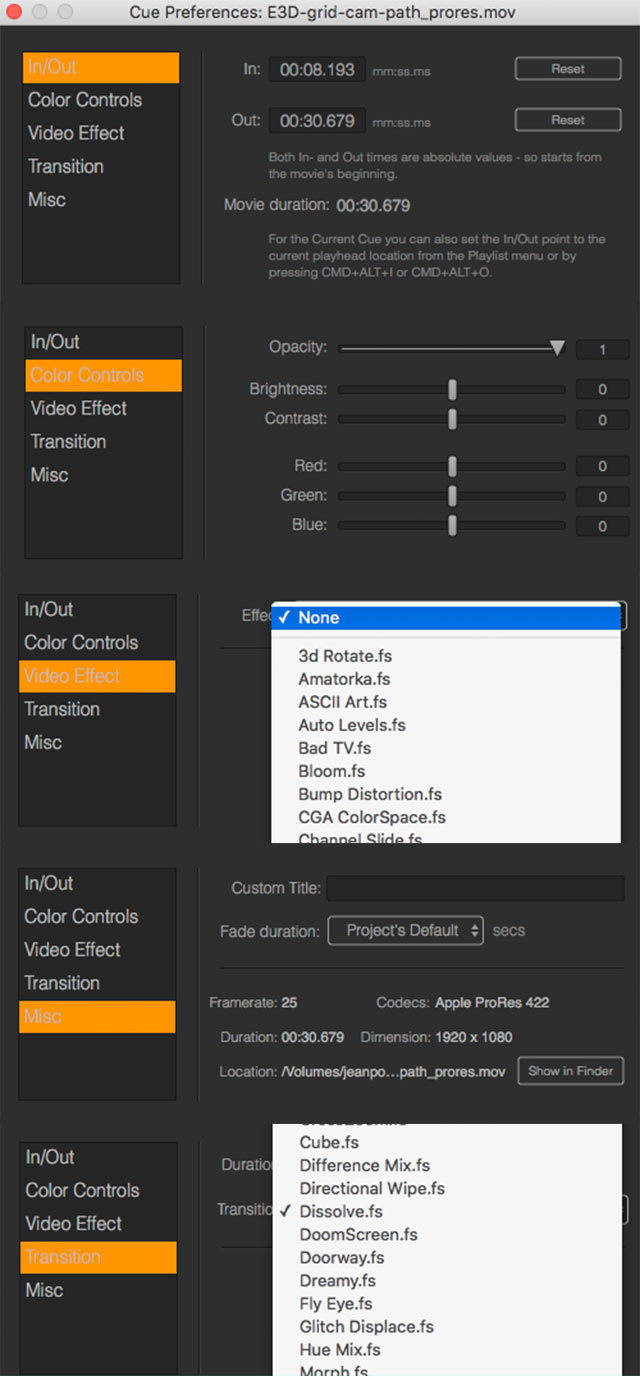

Nuanced control of fades and transitions – e.g. individual control per cue over fade-ins and fade-outs, and over 30 ISF-based video transition options.

Remote Controllability + Sync – Hello MMC (MIDI Machine Control), MSC (MIDI Show Control), hardware and software MIDI controllers, OSC + Art-Net (DMX over Ethernet). Multiple instances of Mitti on the same network can be easily synced.
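To give a feel for what OSC control looks like on the wire, here is a minimal sketch that builds an OSC 1.0 message by hand and sends it over UDP using only the Python standard library. The address `/example/play`, the host and the port below are placeholders, not Mitti's actual OSC address space; the real addresses and port should be taken from Mitti's own documentation or preferences.

```python
import socket
import struct


def _osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *args) -> bytes:
    """Build an OSC 1.0 message with int32, float32 and string arguments."""
    if not address.startswith("/"):
        raise ValueError("OSC addresses must start with '/'")
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            # bool is a subclass of int in Python; reject it to avoid surprises.
            raise TypeError("bool arguments are not handled in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # big-endian int32
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # big-endian float32
        elif isinstance(arg, str):
            tags += "s"
            payload += _osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument type: {type(arg).__name__}")
    return _osc_string(address) + _osc_string(tags) + payload


def send_osc(host: str, port: int, address: str, *args) -> None:
    """Send a single OSC message as one UDP datagram."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message(address, *args), (host, port))


if __name__ == "__main__":
    # Hypothetical address and port, for illustration only.
    send_osc("127.0.0.1", 9000, "/example/play")
```

Because OSC is plain UDP, anything that can open a socket (a show controller, a script, a phone app) can trigger cues this way, which is much of the appeal for installations.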

Output finessing – Includes colour controls, video effects and audio channel routing. For multiple video displays, Mitti provides individual 4-corner warping for each, and edge blending between overlapping projectors. Mitti can also output to Syphon, Blackmagic and NDI.

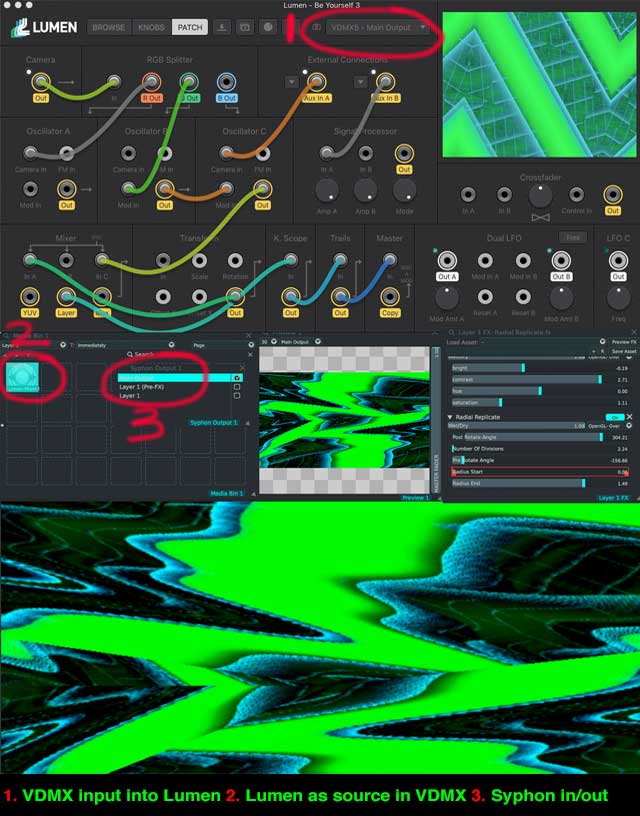

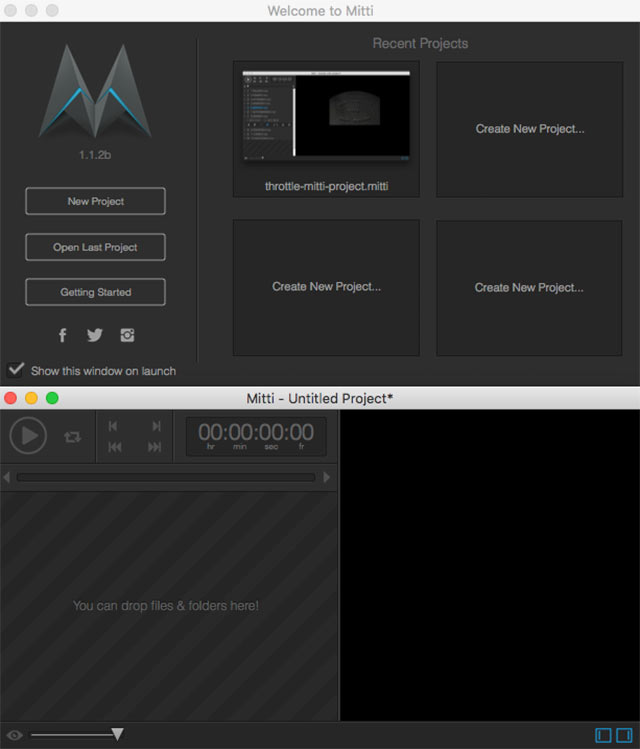

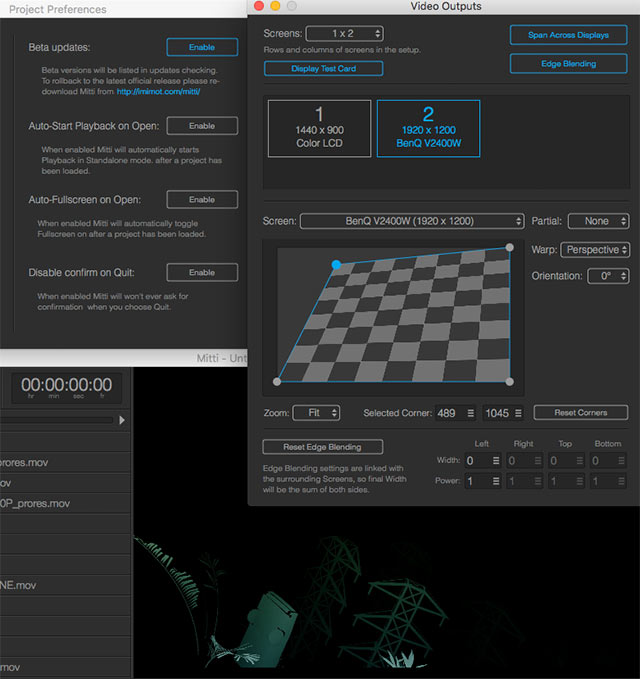

Screenshots:

Below, showing the various cue preference options:

Launch page:

Video output options:

Project Preferences, which include detailed options for each item on the left:

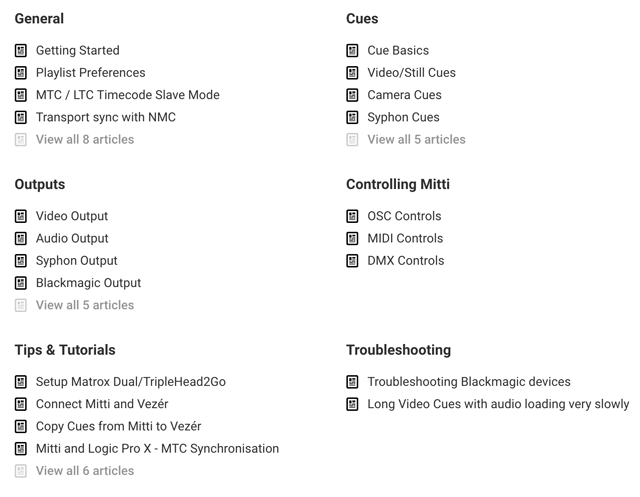

Support:

This can be important when dealing with unusual projects or computer quirks… and Imimot boast great support – “Our average first response time was only 3 hours 5 minutes in the past 7 days!”, as well as extensive FAQ / tips and support documentation:

Requirements:

A Mac running OS X / macOS 10.10 to 10.12.

$299US for perpetual licence (for 2 computers) (30% edu discount available)

$79US rental licence for 30 days from first activation.

Verdict:

Solid, reliable software that’ll be of interest to anyone involved with running video for time-critical events, theatre and installations. Double thumbs up!

Interview with Tamas Nagy, Creator of Mitti

Tamas, below in Imimot HQ, was nice enough to answer some questions about why he made Mitti….

With the ecosystem of video software that exists – what inspired you to add Mitti to it?

The idea of creating Mitti came from Vezér feature requests – the funny thing with this is Vezér was also born from a couple of CoGe feature requests 🙂 A lot of Vezér users were searching …. for an easy-to-use but remote controllable video playback solution which plays nice with Vezér, or even requested video playback functionality in Vezér. Adding video playback functions to Vezér did not sound reasonable to me; the app was not designed that way, and I wanted to leave it as a “signal processes”/show control tool instead of redesigning the whole app. After doing some research I found there was no app on the market at that time that I could offer to Vezér users which was easy to set up and use, lightweight, and controllable by the various protocols that Vezér supports. So I started to create one. The original plan was to make something really basic, but once I started to speak about the project to my pals and acquaintances, I realised there is a need for an easy-to-use app on the market with pro features, and by pro features I mean timecode sync, multi-output handling, capture card support.

What are some contexts you imagine Mitti being used in?

Mitti is targeting the presentation, theatre, broadcast and exhibition markets, usually where reliable cue-based media playback is needed – and this is where Mitti’s current user base comes from: event production companies, theatres, visual techs of touring artists, composers working with DAWs, etc.

What interests you about NDI?

I believe NDI is the next big thing after Syphon. Now you can share frames between computers, even ones running different operating systems, with little or no latency, using cheap network gear or existing network infrastructure. And there is even hardware coming with native NDI support!

What are some interesting ways you’ve enabled Vezér+Mitti interplay?

The coolest thing is NMC I believe – our in-house network protocol which enables sending transport and locate commands through the network. Vezér sends NMC commands by default and Mitti listens to them by default, which means you can make Mitti scrub on its timeline and receive start/stop commands from Vezér with no setup.

Another big thing is OSC Query. This is not strictly Mitti and Vezér related. OSC Query is a protocol – still in draft – proposed by mrRay from Vidvox to discover an OSC-enabled app’s OSC address space. As far as I know only Mitti and Vezér support this protocol on the market, but hopefully others will join pretty soon, since this is going to be a game changer in my opinion.

You can even copy-paste Cues from Mitti to Vezér.

Why is Mitti priced higher than CoGe?

This is a rather complex topic, but basically Mitti has been designed for a fairly different market than CoGe. Also CoGe is highly underpriced in my opinion – well, pricing things is far more complex than I imagined when CoGe hit the prime time – but that is a whole different topic.

Thanks Tamas!

(See also: CoGe review and interview)

by j p, June 28, 2017